Spark SQL DataFrame Basics

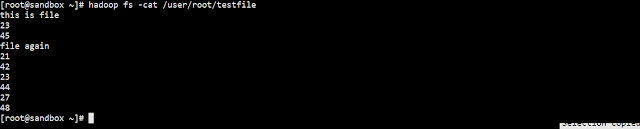

Spark SQL is a Spark module for structured data processing. Users can run SQL queries while taking advantage of Spark's in-memory data processing architecture.

DataFrame: a distributed collection of data organized into named columns. It is conceptually equivalent to a table in a relational database, and it can be constructed from Hive tables, external databases, or existing RDDs.

The entry point for all functionality in Spark SQL is the SQLContext class or one of its descendants (such as HiveContext).

There are two ways to convert an existing RDD into a DataFrame:

1. Inferring the schema
2. Programmatically specifying the schema

We will look at an example of each, step by step.

Inferring Schema: this is done using case classes. The names of the case class's arguments are read using reflection and become the names of the columns. Below is sample code:

```scala
import org.apache.spark.SparkConf
import org.apache.spark.SparkContext
import org.apache.spark.sql.SQLContext
import org.apache.spark.sql.DataFrame
...
```
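A minimal end-to-end sketch of the inferring-schema approach, assuming Spark 1.3 or later (where `toDF()` and `org.apache.spark.sql.DataFrame` are available) and a local master. The `Person` case class, the sample `"name,age"` lines, and the `InferSchemaExample`/`toPeopleDF` names are hypothetical, chosen only for illustration. Spark reads the field names `name` and `age` from the case class via reflection and uses them as column names; the DataFrame is then registered as a temporary table and queried with SQL.

```scala
import org.apache.spark.SparkConf
import org.apache.spark.SparkContext
import org.apache.spark.sql.SQLContext
import org.apache.spark.sql.DataFrame

// Hypothetical record type: its field names (name, age) become the column names.
// Declared at top level so Spark's reflection can derive a schema from it.
case class Person(name: String, age: Int)

object InferSchemaExample {
  // Builds a DataFrame from "name,age" text lines by mapping each line to a Person.
  def toPeopleDF(sqlContext: SQLContext, lines: Seq[String]): DataFrame = {
    import sqlContext.implicits._ // brings rdd.toDF() into scope
    sqlContext.sparkContext
      .parallelize(lines)
      .map(_.split(","))
      .map(p => Person(p(0).trim, p(1).trim.toInt))
      .toDF()
  }

  def main(args: Array[String]): Unit = {
    // local[*] is an assumption for running on a single machine
    val conf = new SparkConf().setAppName("InferSchemaExample").setMaster("local[*]")
    val sc = new SparkContext(conf)
    val sqlContext = new SQLContext(sc)

    // Hypothetical sample data standing in for a text file
    val people = toPeopleDF(sqlContext, Seq("Michael,29", "Andy,30", "Justin,19"))
    people.printSchema() // columns: name (string), age (integer)

    // Register the DataFrame as a table so it can be queried with SQL
    people.registerTempTable("people")
    sqlContext.sql("SELECT name FROM people WHERE age >= 13 AND age <= 19")
      .collect()
      .foreach(row => println(row.getString(0)))

    sc.stop()
  }
}
```

On Spark 2.0 and later, `registerTempTable` is deprecated in favor of `createOrReplaceTempView`, and `SparkSession` is the preferred entry point, but the calls above still exist there.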
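For the second approach, programmatically specifying the schema, a minimal sketch under the same assumptions (Spark 1.3 or later, local master, hypothetical object name and sample data). Instead of relying on a case class, it describes the columns at runtime with a `StructType`, turns each input line into a generic `Row`, and applies the schema with `SQLContext.createDataFrame`. This style is useful when the column names and types are only known at runtime, so no case class can be written ahead of time.

```scala
import org.apache.spark.SparkConf
import org.apache.spark.SparkContext
import org.apache.spark.sql.{DataFrame, Row, SQLContext}
import org.apache.spark.sql.types.{IntegerType, StringType, StructField, StructType}

object ProgrammaticSchemaExample {
  // Step 1: describe the columns explicitly (could be built from runtime metadata)
  val schema: StructType = StructType(Seq(
    StructField("name", StringType, nullable = true),
    StructField("age", IntegerType, nullable = true)
  ))

  def toPeopleDF(sqlContext: SQLContext, lines: Seq[String]): DataFrame = {
    // Step 2: convert each "name,age" line into a generic Row matching the schema
    val rowRDD = sqlContext.sparkContext
      .parallelize(lines)
      .map(_.split(","))
      .map(p => Row(p(0).trim, p(1).trim.toInt))
    // Step 3: apply the schema to the RDD of Rows
    sqlContext.createDataFrame(rowRDD, schema)
  }

  def main(args: Array[String]): Unit = {
    val conf = new SparkConf().setAppName("ProgrammaticSchemaExample").setMaster("local[*]")
    val sc = new SparkContext(conf)
    val sqlContext = new SQLContext(sc)

    // Hypothetical sample data standing in for a text file
    val people = toPeopleDF(sqlContext, Seq("Michael,29", "Andy,30", "Justin,19"))
    people.printSchema()

    people.registerTempTable("people")
    sqlContext.sql("SELECT name, age FROM people WHERE age > 20").show()

    sc.stop()
  }
}
```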