Distributed Cache in Hadoop

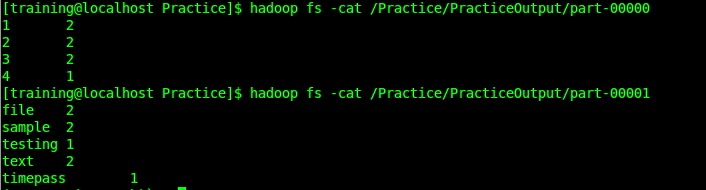

Side Data Distribution: Side data can be defined as extra read-only data that a job needs in order to process the main dataset. The challenge is to make side data available to all the map or reduce tasks (which are spread across the cluster) in a convenient and efficient fashion.

Distributed Cache: Hadoop's distributed cache provides a service for copying files and archives to the task nodes in time for the tasks to use them when they run.

Let's take the standard word count example and add the distributed cache to it. I have a file, article.txt, placed in HDFS. While running the word count, I will read the articles from this file and ignore them when counting words.

Sample Cache File: the cache file contains three articles: 'a', 'an' and 'the'.

Input File for Word Count:

Here is the program:

import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.net.URI;
import java.util.HashSet;
import java.util.StringTokenizer;
import org.apache.hadoo...
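The program listing above is cut off, so the following is not a reconstruction of it. It is a minimal, self-contained Java sketch of the logic such a job runs, assuming the cache file holds the articles separated by whitespace or newlines. In a real Hadoop 2.x job the driver would register the file with `Job.addCacheFile(URI)`, and the mapper's `setup()` would locate it through `context.getCacheFiles()`, read it and build the stop-word set. Here plain `List<String>` inputs stand in for the localized cache file and the input split so the code runs without a cluster. The class name `StopWordCount`, the lowercasing of tokens and the sample text are illustrative assumptions.

```java
import java.util.HashSet;
import java.util.List;
import java.util.Locale;
import java.util.Map;
import java.util.Set;
import java.util.StringTokenizer;
import java.util.TreeMap;

// Hypothetical class: simulates, without Hadoop, the stop-word-aware word count
// that a mapper and reducer would perform once the cache file is available.
public class StopWordCount {

    // Equivalent of the mapper's setup(): parse the side data (the articles)
    // into an in-memory set. Assumes words are separated by whitespace and/or newlines.
    public static Set<String> loadStopWords(List<String> cacheFileLines) {
        Set<String> stopWords = new HashSet<>();
        for (String line : cacheFileLines) {
            StringTokenizer tokens = new StringTokenizer(line);
            while (tokens.hasMoreTokens()) {
                stopWords.add(tokens.nextToken().toLowerCase(Locale.ROOT));
            }
        }
        return stopWords;
    }

    // Equivalent of map() followed by reduce(): tokenize each input line,
    // drop words found in the stop-word set, and sum the remaining occurrences.
    public static Map<String, Integer> countWords(List<String> inputLines, Set<String> stopWords) {
        Map<String, Integer> counts = new TreeMap<>(); // sorted keys, similar to a single reducer's output
        for (String line : inputLines) {
            StringTokenizer tokens = new StringTokenizer(line);
            while (tokens.hasMoreTokens()) {
                // Lowercasing is an assumption so that "The" also matches "the".
                String word = tokens.nextToken().toLowerCase(Locale.ROOT);
                if (!stopWords.contains(word)) {
                    counts.merge(word, 1, Integer::sum);
                }
            }
        }
        return counts;
    }

    public static void main(String[] args) {
        // Stand-ins for the contents of article.txt and the word count input file.
        Set<String> stopWords = loadStopWords(List.of("a", "an", "the"));
        Map<String, Integer> counts = countWords(
                List.of("The cat saw a dog", "an owl saw the cat"), stopWords);
        System.out.println(counts); // prints {cat=2, dog=1, owl=1, saw=2}
    }
}
```

Loading the set once per task, rather than once per record, is the point of side data: each task pays the cost of reading the small file a single time and then does constant-time `HashSet` lookups while it processes the main dataset.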