Welcome back to the Kubernetes tutorial series.

In today's tutorial, we will talk about using persistent volumes in Kubernetes and walk through a quick demo of setting up a persistent volume on your local machine.

Persistent volumes are useful for stateful applications, where you need to keep a record of the last information processed or maintain the transactional state of a database.

By default, when a Pod restarts you lose whatever state the application had written to the container's filesystem, which is not acceptable in every scenario.

In this example, we will provision a PersistentVolume (PV) and a PersistentVolumeClaim (PVC) using the default hostPath-backed storage class.

Kubernetes supports multiple types of storage classes. The complete list can be found at the link below.

https://kubernetes.io/docs/concepts/storage/storage-classes/

GitHub location: https://github.com/shashivish/kubernetes-example/tree/master/pvc-example

Prerequisite: Minikube cluster

At a high level, we will follow these steps in this tutorial.

- Create the Deployment, PersistentVolume and PersistentVolumeClaim files

- Configure the Deployment to use the volume claim

- Verify the data written to disk

- Delete and re-create the Pod

- Verify the data again

Alright, let's begin.

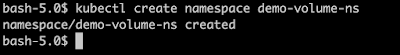

Create a Namespace:

kubectl create namespace demo-volume-ns

Create PersistentVolume & PersistentVolumeClaim

kubectl apply -f pvc.yaml

As you might notice in the configuration, I am using the /Users/shashi/data location on my machine with 2 GB of storage and the default hostpath storage class.

You should update the path to match a local path on your machine.

I have named the claim demo-volume-claim, which I will reference in the deployment file to claim the storage.
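For readers who do not have the repository at hand, here is a minimal sketch of what such a manifest could look like. It is not the author's pvc.yaml: the file name pvc-sketch.yaml, the PV name demo-volume, the ReadWriteOnce access mode, the 2Gi size and the storage class name hostpath are all assumptions made for illustration. Only the host path, the claim name and the namespace come from the text above.

```shell
# Sketch only: writes an illustrative manifest to pvc-sketch.yaml.
# HOST_PATH defaults to the path used in this tutorial; change it for your machine.
HOST_PATH="${HOST_PATH:-/Users/shashi/data}"

cat > pvc-sketch.yaml <<EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: demo-volume            # hypothetical PV name
spec:
  storageClassName: hostpath   # assumption: use a class that exists in your cluster
  capacity:
    storage: 2Gi               # assumption for the "2 GB" mentioned above
  accessModes:
    - ReadWriteOnce
  hostPath:
    path: ${HOST_PATH}
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: demo-volume-claim
  namespace: demo-volume-ns
spec:
  storageClassName: hostpath
  volumeName: demo-volume      # pin the claim to the PV defined above
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 2Gi
EOF

# Apply it against a running cluster with:
#   kubectl apply -f pvc-sketch.yaml
```

Setting volumeName is a design choice in this sketch: it binds the claim to this specific volume instead of leaving the match to the storage class.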

Verify that your PersistentVolume and PersistentVolumeClaim were created properly. PersistentVolumes are cluster-scoped, so the second command needs no namespace flag.

kubectl get pvc -n demo-volume-ns

kubectl get pv

Create Deployment With Volume Claim

The important part of the deployment configuration is the volume section, where I request demo-volume-claim; the application mounts the claimed hostPath volume at /data.

Just for testing, I echo "Hello Youtube" into the file hello.txt so we can verify it later.
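A deployment along these lines might look roughly like the sketch below. Again, this is not the author's deployment.yaml: the file name deployment-sketch.yaml, the busybox image, the app label, the volume name and the sleep that keeps the container alive are assumptions. The deployment name demo-volume-app is inferred from the pod names used later in this post, while the claim name, namespace, mount path and echoed text come from the tutorial.

```shell
# Sketch only: writes an illustrative Deployment to deployment-sketch.yaml.
cat > deployment-sketch.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: demo-volume-app
  namespace: demo-volume-ns
spec:
  replicas: 1
  selector:
    matchLabels:
      app: demo-volume-app           # hypothetical label
  template:
    metadata:
      labels:
        app: demo-volume-app
    spec:
      containers:
        - name: demo-volume-app
          image: busybox             # assumption: any image with a shell works
          # Write the test file, then sleep so the container stays running.
          command: ["sh", "-c", "echo 'Hello Youtube' > /data/hello.txt && sleep 3600"]
          volumeMounts:
            - name: demo-volume      # must match the volume name below
              mountPath: /data
      volumes:
        - name: demo-volume
          persistentVolumeClaim:
            claimName: demo-volume-claim
EOF

# Apply it against a running cluster with:
#   kubectl apply -f deployment-sketch.yaml
```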

kubectl apply -f deployment.yaml

Now make sure your pods were created successfully and are in the Running state.

kubectl get pods -n demo-volume-ns

Verify Data Written

kubectl exec -it demo-volume-app-859f76dfcf-klrrp -n demo-volume-ns -- cat /data/hello.txt

We can see from the above result that the data is written inside the container at /data/hello.txt, a location that is mounted from our host machine.
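Pod names carry a random suffix, so the pod name in the command above will differ on your cluster. One way around copying it by hand is to address the Deployment and let kubectl pick one of its pods. The helper below is a small sketch; the function name read_pod_file is hypothetical, and the deployment name demo-volume-app is inferred from the pod names in this post.

```shell
# Print a file from a pod that belongs to the given Deployment.
# Usage: read_pod_file <namespace> <deployment> <path-in-container>
read_pod_file() {
  kubectl exec "deploy/$2" -n "$1" -- cat "$3"
}

# Example (needs a running cluster):
#   read_pod_file demo-volume-ns demo-volume-app /data/hello.txt
```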

Remember that we used the /Users/shashi/data location on our machine for the PersistentVolume. Let's quickly check what we have there.

We can see that hello.txt is present locally, since the container directory is mounted on our local machine path. The same behaviour applies to the other kinds of storage class you might use, e.g. AWS, Azure, GCP etc.

Verify Data Persistence

Now we will add a new file to the local directory /Users/shashi/data and see whether we can access it from within the container.

echo "This is new File" > /Users/shashi/data/newFile

Now check the directory inside the container.

kubectl exec -it demo-volume-app-859f76dfcf-kgchb -n demo-volume-ns -- ls /data/

Delete the running pod and let it get re-created.

kubectl delete pod demo-volume-app-859f76dfcf-kgchb -n demo-volume-ns

Notice that after deleting the pod, a new pod has been created. Let's see whether we can retrieve the files that were present in the old pod.

kubectl exec -it demo-volume-app-859f76dfcf-48dvf -n demo-volume-ns -- cat /data/newFile

Awesome! We can see that newFile is present in the newly created pod.
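The delete-and-verify steps above can also be wrapped into one small helper, sketched below. The function name check_persistence and the 120 second timeout are assumptions. Note that it deletes every pod in the namespace, which is only reasonable for a throwaway demo namespace like this one.

```shell
# Delete all pods in the namespace, wait for the Deployment to replace them,
# then print a file from the replacement pod.
# Usage: check_persistence <namespace> <deployment> <path-in-container>
check_persistence() {
  ns="$1"; deploy="$2"; file="$3"
  kubectl delete pods --all -n "$ns" &&
    kubectl rollout status "deployment/$deploy" -n "$ns" --timeout=120s &&
    kubectl exec "deploy/$deploy" -n "$ns" -- cat "$file"
}

# Example (needs a running cluster):
#   check_persistence demo-volume-ns demo-volume-app /data/newFile
```

If the file contents are printed after the pod has been replaced, the data survived the restart.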

Conclusion: PersistentVolumes are useful when working with stateful applications. They preserve your data even if your Pod restarts unexpectedly.

Helper command:

To check which storage classes are available in your cluster (storage classes are cluster-scoped, not namespaced):

kubectl get storageclasses

Have a good day!

Keep learning! Keep sharing!
